publications

2024

-

Automatic Differential Diagnosis using Transformer-Based Multi-Label Sequence Classification. Abu Adnan Sadi, Mohammad Ashrafuzzaman Khan, and Lubaba Binte Saber. arXiv preprint arXiv:2408.15827, 2024.

As the field of artificial intelligence progresses, assistive technologies are becoming more widely used across all industries. The healthcare industry is no different, with numerous studies being done to develop assistive tools for healthcare professionals. Automatic diagnostic systems are one such beneficial tool that can assist with a variety of tasks, including collecting patient information, analyzing test results, and diagnosing patients. However, the idea of developing systems that can provide a differential diagnosis has been largely overlooked in most of these research studies. In this study, we propose a transformer-based approach for providing differential diagnoses based on a patient’s age, sex, medical history, and symptoms. We use the DDXPlus dataset, which provides differential diagnosis information for patients based on 49 disease types. Firstly, we propose a method to process the tabular patient data from the dataset and engineer them into patient reports to make them suitable for our research. In addition, we introduce two data modification modules to diversify the training data and consequently improve the robustness of the models. We approach the task as a multi-label classification problem and conduct extensive experiments using four transformer models. All the models displayed promising results by achieving over 97% F1 score on the held-out test set. Moreover, we design additional behavioral tests to get a broader understanding of the models. In particular, for one of our test cases, we prepared a custom test set of 100 samples with the assistance of a doctor. The results on the custom set showed that our proposed data modification modules improved the model’s generalization capabilities. We hope our findings will provide future researchers with valuable insights and inspire them to develop reliable systems for automatic differential diagnosis.

@article{sadi2024automatic,
  title = {Automatic Differential Diagnosis using Transformer-Based Multi-Label Sequence Classification},
  author = {Sadi, Abu Adnan and Khan, Mohammad Ashrafuzzaman and Saber, Lubaba Binte},
  journal = {arXiv preprint arXiv:2408.15827},
  year = {2024},
  doi = {https://doi.org/10.48550/arXiv.2408.15827},
  google_scholar_id = {2osOgNQ5qMEC},
  demo = {https://huggingface.co/spaces/AdnanSadi/Differential-Diagnosis-Tool}
}

-

A Comparative Study on Plant Diseases Using Object Detection Models. Abu Adnan Sadi, Ziaul Hossain, Ashfaq Uddin Ahmed, and Md Tazin Morshed Shad. In Science and Information Conference, 2024. The peer-reviewed and final version of the paper is available here.

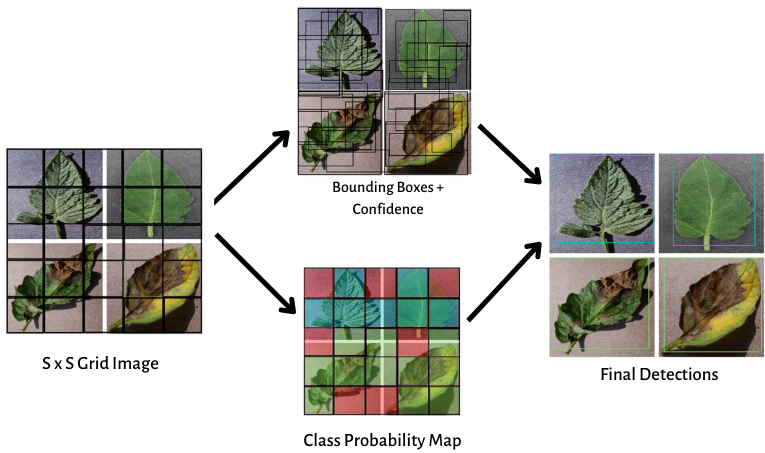

Plant diseases are the most widespread and significant hazard to ‘Precision Agriculture’. With early detection and analysis of diseases, the successful yield of cultivation can be increased; therefore, this process is regarded as a critical event. Unfortunately, the manual observation-based detection method is error-prone, hard, and costly. Automation in identifying plant diseases is extremely beneficial because it saves time and manpower. Applying a neural network-based solution can detect disease symptoms at an early stage and facilitate the process of taking preventive or reactive measures. There have been various deep learning-based solutions, which were developed using lengthy training/testing cycles with large datasets. This study aims to investigate the suitability of computer vision-based approaches for this purpose. A comparative study has been performed using recently proposed object detection models such as YOLOv5, YOLOX, Scaled-YOLOv4, and SSD. A tailored version of the “PlantVillage” and “PlantDoc” datasets was used in the Indian sub-continent context, which included plant disease classes related to Potato, Corn, and Tomato plants. This study provides a detailed comparison between these object detection models and summarizes the suitability of these models for different cases. This paper can be useful for prospective researchers to decide which object detection models could be used for a specific scenario of Plant Disease Detection.

@inproceedings{sadi2024comparative,
  title = {A Comparative Study on Plant Diseases Using Object Detection Models},
  author = {Sadi, Abu Adnan and Hossain, Ziaul and Ahmed, Ashfaq Uddin and Shad, Md Tazin Morshed},
  booktitle = {Science and Information Conference},
  pages = {419--438},
  year = {2024},
  organization = {Springer},
  doi = {https://doi.org/10.1007/978-3-031-62269-4_29},
  google_scholar_id = {9yKSN-GCB0IC},
}

2023

-

An end-to-end pollution analysis and detection system using artificial intelligence and object detection algorithms. Md Yearat Hossain, Ifran Rahman Nijhum, Md Tazin Morshed Shad, Abu Adnan Sadi, Md Mahmudul Kabir Peyal, and Rashedur M Rahman. Decision Analytics Journal, 2023. Dataset can be found here.

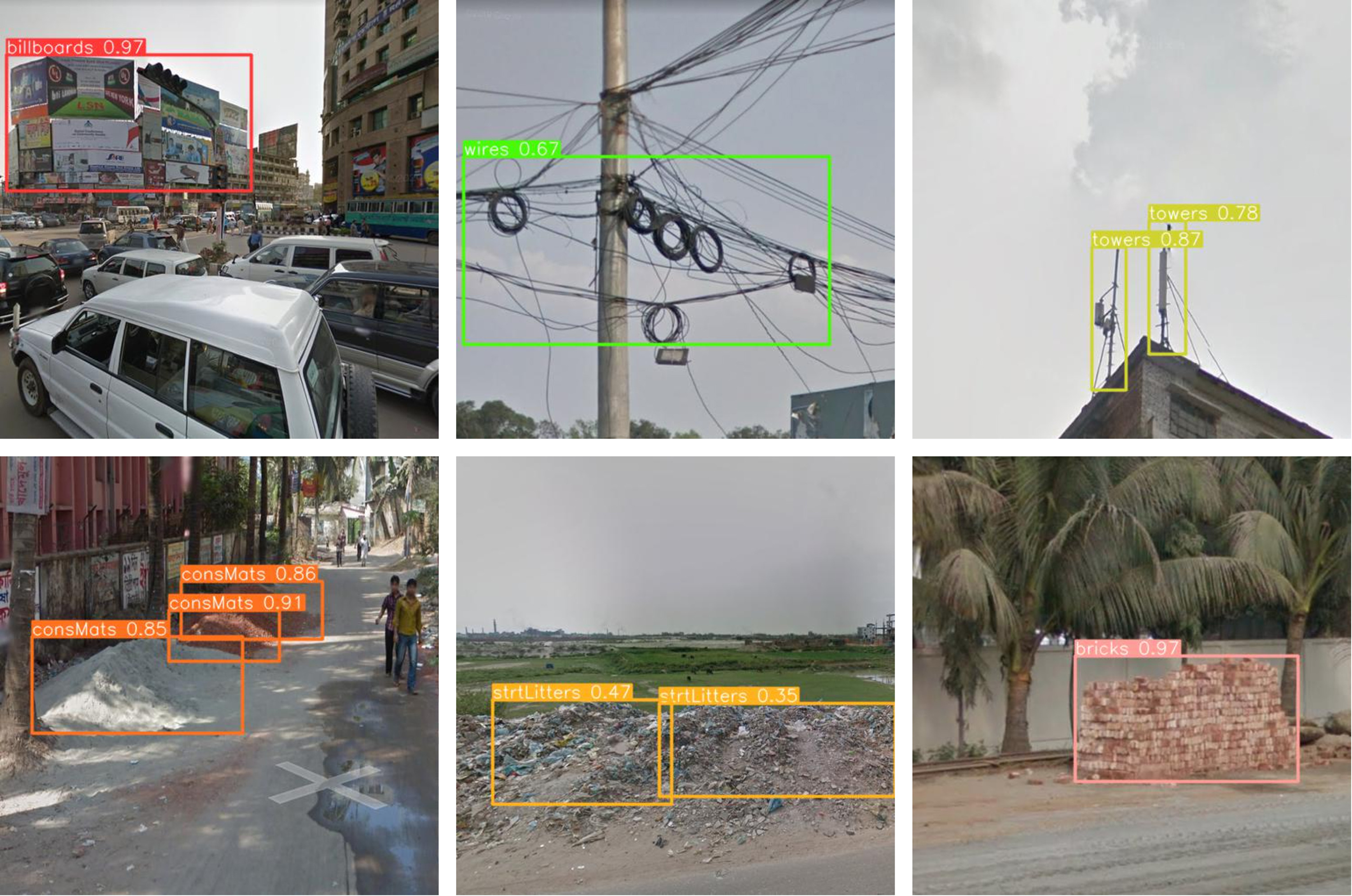

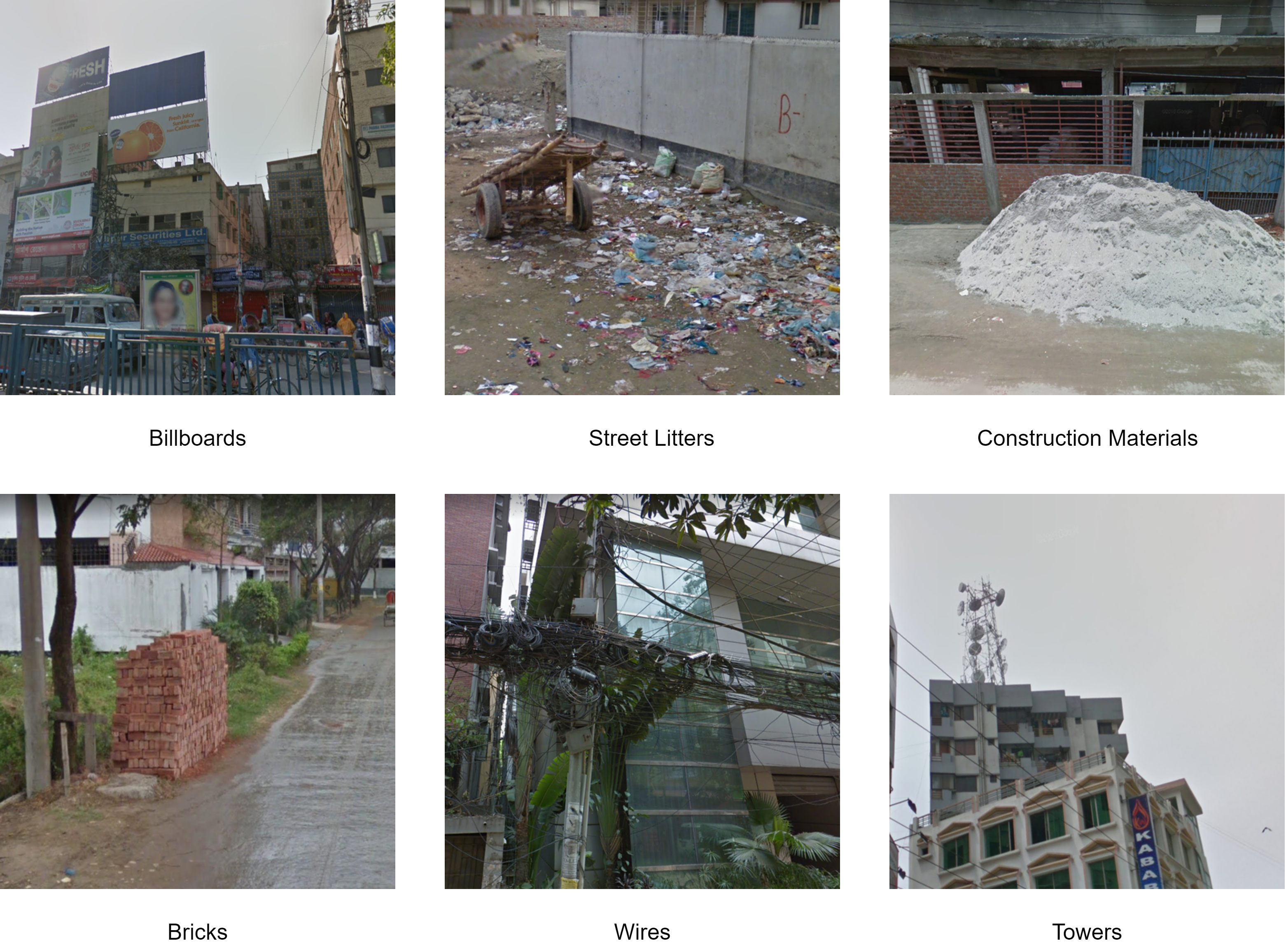

Environmental pollution is generally a by-product of various human activities. Researchers have studied the dangers and harmful effects of pollutants and environmental pollution for centuries, and many necessary steps have been taken. Modern solutions are being constantly developed to tackle these issues efficiently. Visual pollution analysis and detection is a relatively less studied subject, even though it significantly impacts our daily lives. Building automatic pollution or pollutants detection systems has become increasingly popular due to the modern development of advanced artificial intelligence systems. Although some advances have been made, automated pollution detection is not well-researched or fully understood. This study demonstrates how various object detection models could identify such environmental pollutants and how end-to-end applications can analyze the findings. We trained our dataset on three popular object detection models, YOLOv5, Faster R-CNN (Region-based Convolutional Neural Network), and EfficientDet, and compared their performances. The best Mean Average Precision (mAP) score of 0.85 was achieved by the You Only Look Once (YOLOv5) model using its inbuilt augmentation techniques. Then we built a minimal Android application, using which volunteers or authorities could capture and send images along with their Global Positioning System (GPS) coordinates that might contain visual pollutants. These images and coordinates are stored in the cloud and later used by our local server. The local server utilizes the best-trained visual pollution detection model. It generates heat maps of particular locations, visualizing the condition of visual pollution based on the data stored in the cloud. Along with the heat map, our analysis system provides visual analytics like bar charts and pie charts to summarize a region’s condition. In addition, we used Active Learning and Incremental Learning methods to utilize the newly collected dataset by building a semi-autonomous annotation and model upgrading system. This also addresses the data scarcity problem associated with further research on visual pollution.

@article{vispol2023,
  title = {An end-to-end pollution analysis and detection system using artificial intelligence and object detection algorithms},
  author = {Hossain, Md Yearat and Nijhum, Ifran Rahman and Shad, Md Tazin Morshed and Sadi, Abu Adnan and Peyal, Md Mahmudul Kabir and Rahman, Rashedur M},
  journal = {Decision Analytics Journal},
  volume = {8},
  pages = {100283},
  year = {2023},
  publisher = {Elsevier},
  doi = {https://doi.org/10.1016/j.dajour.2023.100283},
  google_scholar_id = {u5HHmVD_uO8C},
}

2022

-

LMFLOSS: A Hybrid Loss For Imbalanced Medical Image Classification. Abu Adnan Sadi, Labib Chowdhury, Nusrat Jahan, Mohammad Newaz Sharif Rafi, Radeya Chowdhury, Faisal Ahamed Khan, and Nabeel Mohammed. arXiv preprint arXiv:2212.12741, 2022.

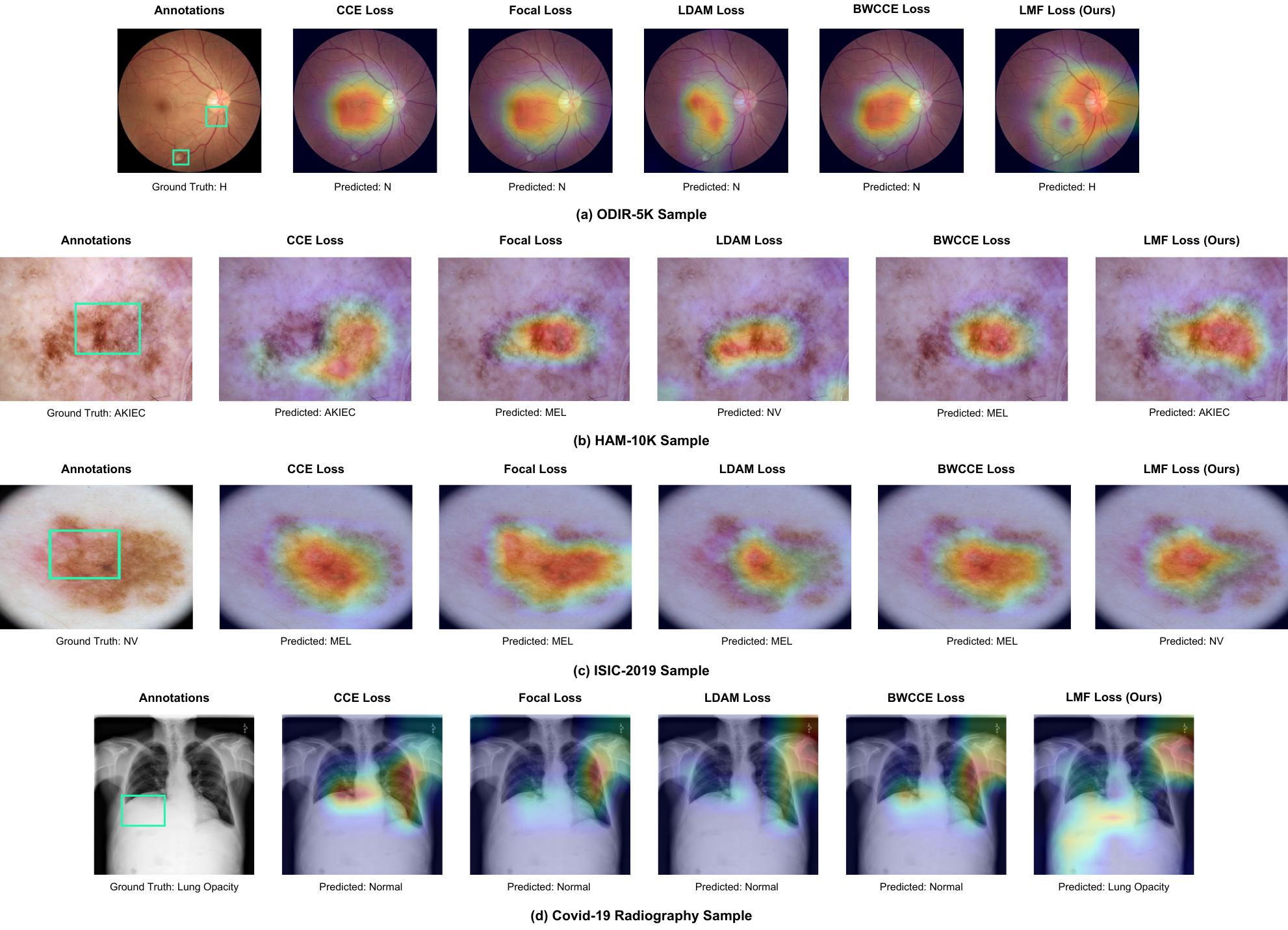

Automatic medical image classification is a very important field where the use of AI has the potential to have a real social impact. However, there are still many challenges that act as obstacles to making practically effective solutions. One of those is the fact that most of the medical imaging datasets have a class imbalance problem. As a result, existing AI techniques, particularly neural network-based deep-learning methodologies, often perform poorly in such scenarios. This makes the area an interesting and active research focus. In this study, we propose a novel loss function to train neural network models to mitigate this critical issue in this important field. Through rigorous experiments on three independently collected datasets of three different medical imaging domains, we empirically show that our proposed loss function consistently performs well with an improvement between 2%-10% macro F1 when compared to the baseline models. We hope that our work will precipitate new research toward a more generalized approach to medical image classification.

@article{LMFloss2022,
  title = {LMFLOSS: A Hybrid Loss For Imbalanced Medical Image Classification},
  author = {Sadi, Abu Adnan and Chowdhury, Labib and Jahan, Nusrat and Rafi, Mohammad Newaz Sharif and Chowdhury, Radeya and Khan, Faisal Ahamed and Mohammed, Nabeel},
  year = {2022},
  journal = {arXiv preprint arXiv:2212.12741},
  doi = {https://doi.org/10.48550/arXiv.2212.12741},
  google_scholar_id = {u-x6o8ySG0sC},
}

2021

-

Visual Pollution Detection Using Google Street View and YOLO. Md Yearat Hossain, Ifran Rahman Nijhum, Abu Adnan Sadi, Md Tazin Morshed Shad, and Rashedur M Rahman. In 2021 IEEE 12th Annual Ubiquitous Computing, Electronics & Mobile Communication Conference (UEMCON), 2021. Dataset can be found here.

In recent years, visual pollution has become a major concern in rapidly growing cities. This research deals with detecting visual pollutants from street images collected using Google Street View. For this experiment, we chose the streets of Dhaka, the capital city of Bangladesh, to build our image dataset, mainly because Dhaka was ranked recently as one of the most polluted cities in the world. However, the methods shown in this study can be applied to images of any city around the world and would produce similar output. Throughout this study, we tried to portray the possible utilisation of Google Street View in building datasets and how this data can be used to solve environmental pollution with the help of deep learning. The image dataset was created manually by taking screenshots from various angles of every street view with visual pollutants in the frame. The images were then manually annotated using CVAT and were fed into the model for training. For the detection, we have used the object detection model YOLOv5 to detect all the visual pollutants present in the image. Finally, we evaluated the results achieved from this study and suggested directions for using its outcomes in different domains.

@inproceedings{vispol2021,
  title = {Visual Pollution Detection Using Google Street View and YOLO},
  author = {Hossain, Md Yearat and Nijhum, Ifran Rahman and Sadi, Abu Adnan and Shad, Md Tazin Morshed and Rahman, Rashedur M},
  year = {2021},
  booktitle = {2021 IEEE 12th Annual Ubiquitous Computing, Electronics \& Mobile Communication Conference (UEMCON)},
  pages = {0433--0440},
  organization = {IEEE},
  doi = {10.1109/UEMCON53757.2021.9666654},
  google_scholar_id = {d1gkVwhDpl0C},
}